Social media sites like Twitter and Facebook, being user-friendly and free, give people an opportunity to make their voices heard. People of all age groups use these sites to share every moment of their lives, flooding the sites with data. Alongside these commendable features, social media also has downsides. Because these sites place few restrictions on how users express their views, anyone can post hostile and baseless comments in abusive language against anybody, with the ulterior motive of tarnishing that person's image and status in society. Furthermore, content on such platforms is spread across many different languages, including code-mixed languages such as Hinglish. It therefore becomes a huge responsibility for these sites to identify hateful content before it spreads to the masses.

A conversational thread can also contain hate, offensive, and profane content that is not apparent from a single standalone tweet, comment, or reply, but that can be identified given the context of the parent content.

The above screenshot from Twitter illustrates the problem at hand. The parent (source) tweet, posted at 2:30 am on May 11th, expresses hate and profanity towards Muslim countries regarding the controversy surrounding the recent Israel-Palestine conflict. The two comments on the tweet say "Amine", which means trustworthy or honest in Arabic. If these two comments were analyzed for hate or offensive speech without the context of the parent tweet, they would not be classified as hate or offensive content. Taking the context of the conversation into account, however, we can see that the comments support the hate and profanity expressed in the parent tweet. Those comments are therefore labelled as hate/offensive/profane.

This sub-task focused on the binary classification of such conversational tweets with tree-structured data into:

Sampling and annotating social media conversation threads is very challenging. To minimize the effect of bias, we chose controversial stories on diverse topics. We hand-picked controversial stories from the following topics, which have a high probability of containing hate, offensive, and profane posts.

The controversial stories are as follows:

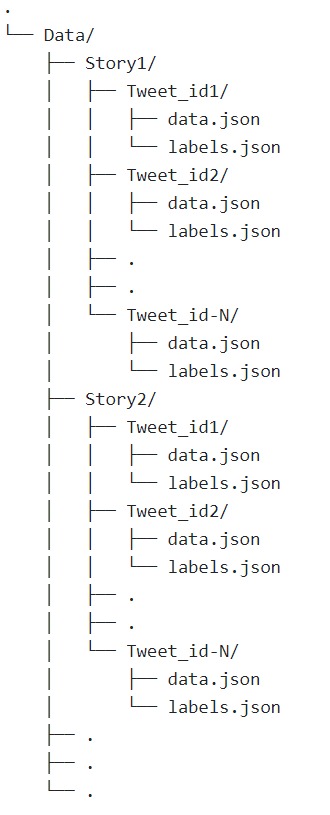

The directory structure of the data directory:

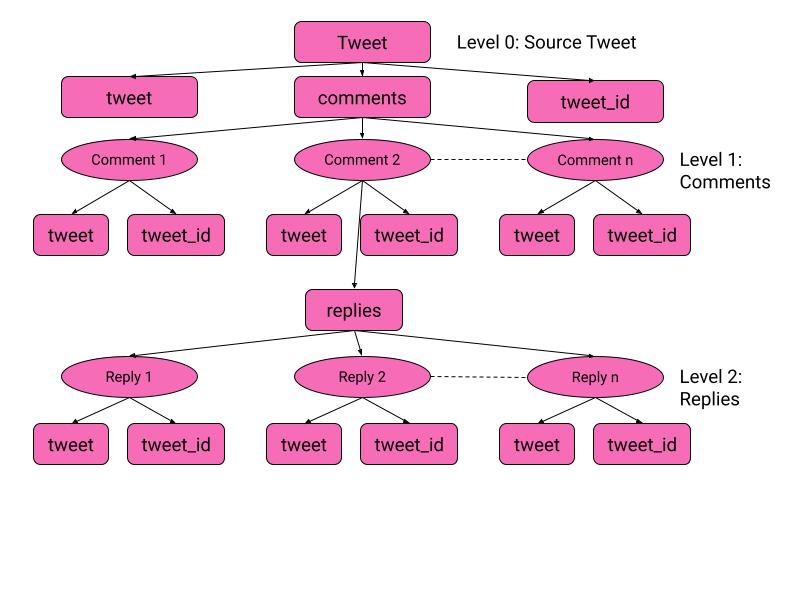

In the diagram, rectangles represent keys, and ovals represent elements of the array stored under the parent key.
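Because a comment or reply is labelled in light of the posts above it, one way to use this tree is to pair every post with the text of its ancestors. The sketch below walks a single thread depth-first and returns, for each post, its ID and its own text prefixed by its ancestors' text. The key names `tweet_id`, `tweet`, `comments`, and `replies`, and the `[SEP]` separator, are assumptions for illustration; check them against the actual files before use.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical key names: adjust to match the real schema in the data directory.
ID_KEY, TEXT_KEY = "tweet_id", "tweet"
CHILD_KEYS = ("comments", "replies")  # keys whose values are arrays of child posts

SEP = " [SEP] "  # arbitrary separator between ancestor text and child text


def flatten_thread(node: Dict, context: Optional[List[str]] = None) -> List[Tuple[str, str]]:
    """Return (tweet_id, text_with_context) for the node and all of its descendants."""
    path = (context or []) + [node[TEXT_KEY]]
    rows = [(node[ID_KEY], SEP.join(path))]
    for key in CHILD_KEYS:
        for child in node.get(key, []):
            # Each child sees the full chain of texts from the source tweet down to its parent.
            rows.extend(flatten_thread(child, path))
    return rows


if __name__ == "__main__":
    thread = {
        "tweet_id": "1", "tweet": "parent tweet text",
        "comments": [
            {"tweet_id": "2", "tweet": "a comment",
             "replies": [{"tweet_id": "3", "tweet": "a reply"}]},
        ],
    }
    for tweet_id, text in flatten_thread(thread):
        print(tweet_id, "->", text)
```

A reply then carries both its comment and the source tweet as context, which is exactly the information needed to judge posts like the "Amine" comments above.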

labels.json has a flat structure with no nested data. It contains only key-value pairs in which the key is a tweet ID and the value is the label of the tweet with that ID.
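Because labels.json is a flat mapping, it loads directly into a dictionary keyed by tweet ID. The sketch below assumes only that flat structure; the sample IDs and the label strings `label_0`/`label_1` are made-up placeholders, not the task's actual label set. `attach_labels` is a hypothetical helper that pairs (tweet_id, text) rows with their labels and skips IDs that have no label.

```python
import json
import os
import tempfile
from collections import Counter
from typing import Dict, List, Tuple


def load_labels(path: str) -> Dict[str, str]:
    """Load the flat {tweet_id: label} mapping. JSON object keys are always strings."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def attach_labels(rows: List[Tuple[str, str]], labels: Dict[str, str]) -> List[Tuple[str, str]]:
    """Turn (tweet_id, text) rows into (text, label) pairs, dropping unlabelled IDs."""
    return [(text, labels[tid]) for tid, text in rows if tid in labels]


if __name__ == "__main__":
    # Placeholder content written to a temporary file so the example runs anywhere.
    sample = {"111": "label_1", "222": "label_0", "333": "label_1"}
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "labels.json")
        with open(path, "w", encoding="utf-8") as f:
            json.dump(sample, f)
        labels = load_labels(path)
    print(Counter(labels.values()))  # label distribution
    print(attach_labels([("111", "some text"), ("999", "no label")], labels))
```

Counting the label distribution first is a cheap way to spot class imbalance before choosing a classifier or evaluation metric.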

FIRE hosts many beginner-friendly workshops every year, and we understand that this problem may not seem beginner-friendly. We have therefore decided to provide participants with a baseline model that serves as a template for steps such as importing data, preprocessing, featurization, and classification. Participants can modify the code and experiment with various settings. To access the code for the baseline model, click here.
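As a rough illustration of those four steps, and not the organizers' baseline, the sketch below uses only the Python standard library: data is imported from an in-memory list, preprocessing lowercases text and strips URLs and @mentions, featurization is a bag-of-words count, and classification uses multinomial Naive Bayes with add-one smoothing. The classifier choice, the regular expressions, and the toy texts and labels are all assumptions made for this example.

```python
import math
import re
from collections import Counter, defaultdict
from typing import Dict, List

URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
TOKEN_RE = re.compile(r"\w+")


def preprocess(text: str) -> List[str]:
    """Lowercase, remove URLs and @mentions, and split into word tokens."""
    text = MENTION_RE.sub(" ", URL_RE.sub(" ", text.lower()))
    return TOKEN_RE.findall(text)


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing over token counts."""

    def fit(self, docs: List[List[str]], labels: List[str]) -> "NaiveBayes":
        self.class_counts = Counter(labels)
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        for tokens, label in zip(docs, labels):
            self.word_counts[label].update(tokens)  # bag-of-words features per class
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.totals = {c: sum(counts.values()) for c, counts in self.word_counts.items()}
        return self

    def predict(self, tokens: List[str]) -> str:
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        best, best_score = None, -math.inf
        for c in self.class_counts:
            score = math.log(self.class_counts[c] / n_docs)  # log prior
            for w in tokens:
                if w in self.vocab:  # words never seen in training are ignored
                    score += math.log((self.word_counts[c][w] + 1) / (self.totals[c] + v))
            if score > best_score:
                best, best_score = c, score
        return best


if __name__ == "__main__":
    # Toy, made-up training data with placeholder labels.
    train = [
        ("you are awful and stupid", "offensive"),
        ("what a stupid awful take @someone", "offensive"),
        ("have a lovely day everyone", "not_offensive"),
        ("great match today https://example.com", "not_offensive"),
    ]
    docs = [preprocess(t) for t, _ in train]
    model = NaiveBayes().fit(docs, [y for _, y in train])
    print(model.predict(preprocess("stupid awful post")))
```

Swapping in a different featurizer or classifier only requires changing `preprocess` or `NaiveBayes`, which mirrors the kind of experimentation the baseline is meant to invite. To exploit conversational context, the input text for a comment or reply can include its parent's text before featurization.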

Note: The baseline model is only meant to give you a basic idea of our directory structure and of how one can classify context-based data; there are no restrictions on the kinds of experiments you may run.

We are thankful to an anonymous reviewer of Expert Systems with Applications who inspired us to formulate this problem during the review of our paper (Modha et al. 2021).

Subscribe to our mailing list for the latest announcements and discussions.

For any queries, write to us at hasoc2019@googlegroups.com